Smart City Intersections


Dense urban environments present unique challenges to the deployment of autonomous vehicles, including large numbers of vehicles moving at various speeds, obstructions that are opaque to in-vehicle sensors, and the chaotic behavior of pedestrians. Pedestrian safety cannot be compromised, and personal privacy must be preserved. Smart city intersections will be at the core of an AI-powered, citizen-friendly traffic management system for crowded metropolises. COSMOS will provide the components needed to develop smart intersections that support cloud-connected vehicles and help overcome the limitations of autonomous vehicles. In particular, COSMOS will enable vehicles to wirelessly share in-vehicle sensor data with other vehicles and with edge cloud servers. COSMOS will also deploy a variety of infrastructure sensors, including street-level and bird’s eye cameras, whose data will be aggregated by the servers. The servers will run real-time AI-based algorithms to monitor and manage traffic.

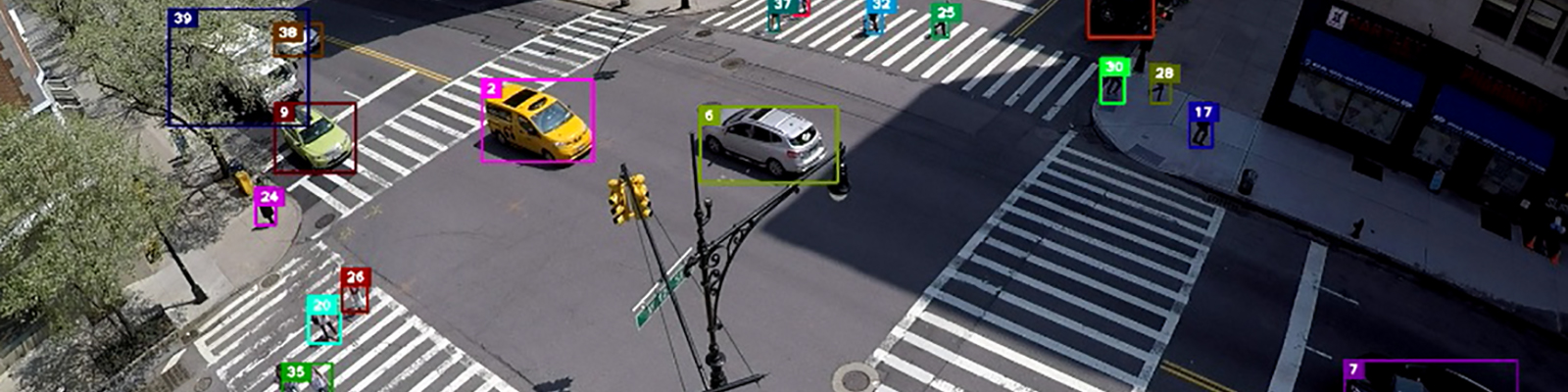

We are conducting research on a number of smart intersection use cases and executing the experiments at the COSMOS pilot site. Video 1 demonstrates object detection at the pilot site, located at the corner of 120th Street and Amsterdam Avenue. Video 2 shows a scaled model of the pilot site intersection, which can facilitate “physical twin” experimentation.

Video 1. Vehicle and pedestrian object detection at the COSMOS pilot site located at the corner of 120th Street and Amsterdam Avenue, New York City.

Video 2. Emulator of the pilot intersection – scaled model of the intersection which can facilitate “physical twin” experimentation.

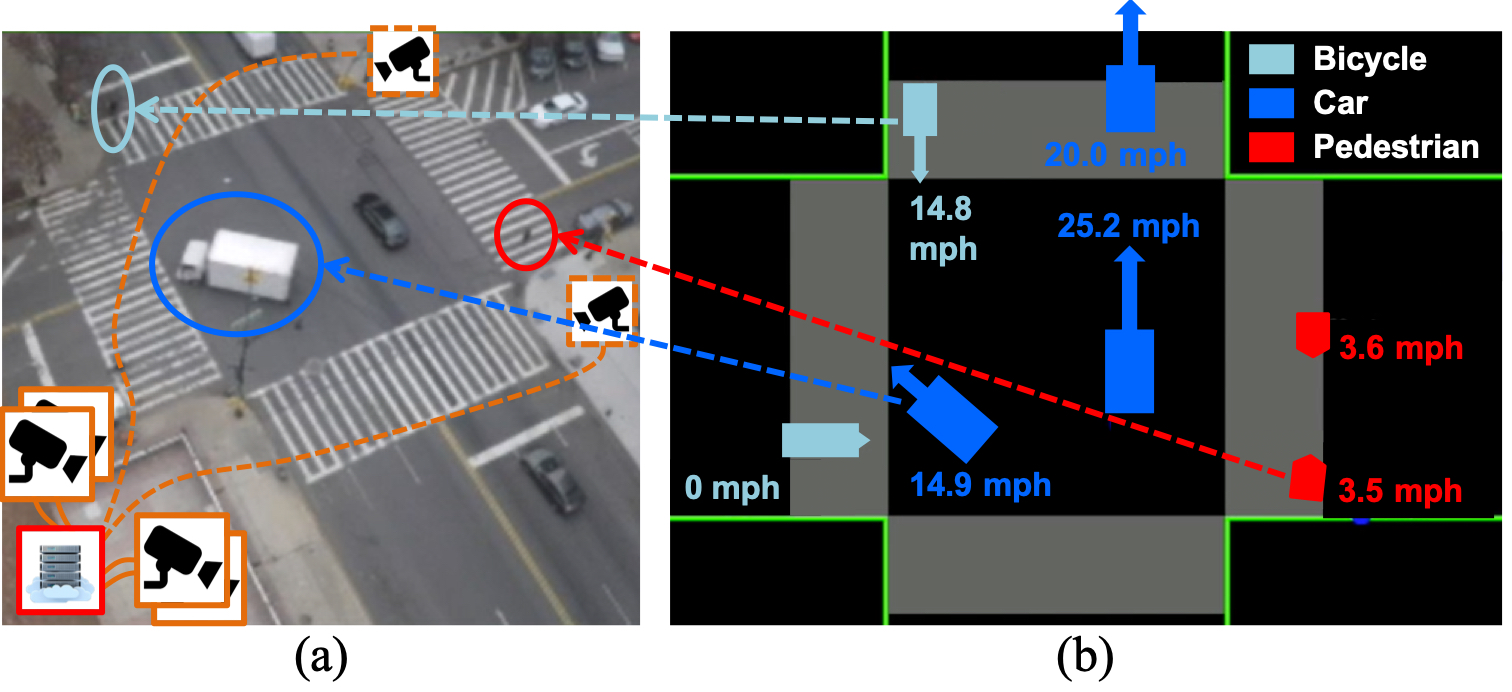

To facilitate the efficient movement of vehicles and the distribution of emergency information to pedestrians, we devised the “radar screen” system and experiment. The system is envisioned to provide real-time, continuously updated snapshots of the velocity vectors of all objects in the intersection. The radar screen is computed by AI learning algorithms that are dynamically distributed across edge and cloud computing resources based on latency requirements and available communications bandwidth. The radar screen will be wirelessly broadcast to participants in the intersection with latency low enough to support safety-critical applications. Results of experiments that use bird’s eye cameras at the COSMOS pilot site to detect and track vehicles and pedestrians are reported in [1]. By evaluating and customizing video pre-processing and deep-learning algorithms, [1] reports both the real-time computation capabilities and the achievable detection and tracking accuracy. Figure 1 illustrates the “radar screen” use case.
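The “radar screen” snapshot described above can be illustrated with a minimal sketch that estimates a velocity vector for each tracked object from its two most recent positions. It assumes, hypothetically, that an upstream tracker already provides per-object positions in ground-plane meters (for example, after mapping bird’s eye camera pixels onto the street plane) and that frames arrive at a known rate. The names `Track` and `radar_snapshot` are illustrative only and are not part of the COSMOS software described in [1].

```python
import math
from dataclasses import dataclass, field


@dataclass
class Track:
    """Hypothetical tracker output for one object, in ground-plane meters."""
    object_id: int
    label: str
    positions: list = field(default_factory=list)  # [(x_m, y_m), ...], oldest first


def radar_snapshot(tracks, fps):
    """Map object_id -> (label, vx, vy, speed) in m/s for every track with 2+ positions.

    Velocity is a finite difference between the last two frames, scaled by
    the frame rate (frames per second) to convert m/frame into m/s.
    """
    snapshot = {}
    for track in tracks:
        if len(track.positions) < 2:
            continue  # a brand-new track has no motion estimate yet
        (x0, y0), (x1, y1) = track.positions[-2], track.positions[-1]
        vx, vy = (x1 - x0) * fps, (y1 - y0) * fps
        snapshot[track.object_id] = (track.label, vx, vy, math.hypot(vx, vy))
    return snapshot


# Example: three tracked objects observed at 15 fps
tracks = [
    Track(1, "car", [(0.0, 0.0), (0.5, 0.0)]),         # 0.5 m per frame
    Track(2, "pedestrian", [(3.0, 1.0), (3.0, 1.1)]),  # 0.1 m per frame
    Track(3, "pedestrian", [(5.0, 5.0)]),              # just appeared
]
snapshot = radar_snapshot(tracks, fps=15)
for object_id, (label, vx, vy, speed) in snapshot.items():
    print(f"{object_id} {label}: v=({vx:.1f}, {vy:.1f}) m/s, |v|={speed:.1f} m/s")
```

With these example values the car moves at 7.5 m/s and the first pedestrian at about 1.5 m/s, while the newly appeared pedestrian is left out until a second position is available. A two-frame difference is noisy; a practical system would more likely smooth over several frames (for instance with a Kalman filter), which this sketch omits.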

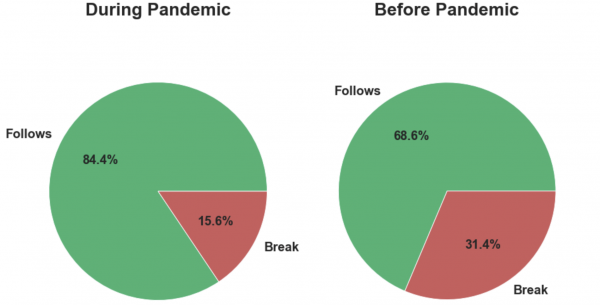

We also devised a set of experiments in which the cameras are used to assess compliance with social distancing policies during the COVID-19 pandemic. Social distancing can reduce infection rates in respiratory pandemics such as COVID-19, especially in dense urban areas. Hence, we used the PAWR COSMOS wireless edge-cloud testbed to design and evaluate two different approaches to social distancing analysis. The first, the Automated video-based Social Distancing Analyzer (Auto-SDA) [2, 3, 4], was designed to measure pedestrians’ compliance with social distancing protocols using street-level cameras. Since street-level cameras can raise pedestrian privacy concerns, we also developed the Bird’s eye view Social Distancing Analyzer (B-SDA) [4, 6, 7], which uses bird’s eye view cameras and thereby preserves pedestrians’ privacy. Both Auto-SDA and B-SDA consist of multiple modules; the papers document the role of each processing module and the overall performance in evaluating pedestrians’ compliance with social distancing protocols. We demonstrate Auto-SDA and B-SDA on videos recorded from cameras deployed on the 2nd and 12th floors of Columbia’s Mudd building, respectively. Figure 2 illustrates compliant (green circles) and non-compliant (red circles) pedestrian groups, as determined by the social distancing compliance algorithms. Figure 3 illustrates that pedestrians adhered to social distancing rules notably more during the COVID-19 pandemic than before it.
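The basic geometric check behind a social distancing analyzer can be illustrated with a minimal sketch: given pedestrian positions already projected onto the ground plane in meters, flag every pair that is closer than a distancing threshold. The 2 m threshold, the function name `distancing_violations`, and the assumption that a pixel-to-ground mapping has already been applied are all illustrative. Auto-SDA and B-SDA consist of multiple modules, as noted above, and this sketch does not reproduce them.

```python
import math
from itertools import combinations


def distancing_violations(positions, threshold_m=2.0):
    """Check pairwise separation of pedestrians on the ground plane.

    positions: dict of pedestrian id -> (x_m, y_m), assumed already mapped
               from image pixels to ground-plane meters.
    threshold_m: hypothetical minimum allowed separation in meters.
    Returns (violating_pairs, compliant) where compliant maps id -> bool.
    """
    violating_pairs = []
    compliant = {pid: True for pid in positions}
    # Compare every unordered pair exactly once: O(n^2), fine for one intersection.
    for (a, pos_a), (b, pos_b) in combinations(positions.items(), 2):
        if math.dist(pos_a, pos_b) < threshold_m:
            violating_pairs.append((a, b))
            compliant[a] = compliant[b] = False
    return violating_pairs, compliant


people = {"p1": (0.0, 0.0), "p2": (1.2, 0.5), "p3": (6.0, 4.0)}
pairs, compliant = distancing_violations(people)
print(pairs)      # p1 and p2 are 1.3 m apart, below the 2 m threshold
print(compliant)  # p3 stands alone and is the only compliant pedestrian
```

In a visualization like the one described above, pedestrians marked compliant would be drawn with green circles and the others with red circles.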

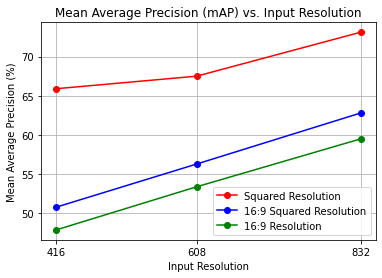

A critical component in designing an effective real-time object detection/tracking pipeline is understanding how object density (i.e., the number of objects in a scene), image resolution, and frame rate influence the performance metrics. We devised techniques [5] to explore the accuracy and speed metrics in support of pipelines that meet the precision and latency needs of a real-time environment. We examined the impact of varying image resolution, frame rate, and object density on object detection performance metrics. Experiments at the pilot site show that increasing the frame resolution from 416×416 pixels to 832×832 pixels, and cropping the images to a square resolution, result in a systematic increase in average precision for all object classes. Decreasing the frame rate from 15 fps to 5 fps preserves more than 90% of the highest F1-score achieved for all object classes. The results inform the choice of video preprocessing stages and modifications to established AI-based object detection/tracking methods, and suggest optimal hyper-parameter values. Figure 4 illustrates that mean average precision (mAP) increases in a piecewise-linear fashion as the resolution increases from 416×416 to 832×832 pixels.
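The preprocessing choices discussed above can be sketched with a few helper functions: a square crop (applied before resizing a frame to, e.g., 416×416 or 832×832 pixels), frame subsampling from 15 fps to 5 fps, and the F1-score used to compare settings. This is a minimal illustration under stated assumptions, not the evaluation code of [5]: the function names are hypothetical, the crop is assumed to be centered, the resize itself is left to an image library, and the detection counts in the example are made up for illustration only.

```python
def center_square_crop_box(width, height):
    """Return (left, top, right, bottom) of the largest centered square in a frame."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side


def subsample_frames(num_frames, source_fps, target_fps):
    """Indices of the frames kept when reducing source_fps to target_fps."""
    if source_fps % target_fps:
        raise ValueError("this sketch assumes source_fps is a multiple of target_fps")
    stride = source_fps // target_fps  # e.g., 15 fps -> 5 fps keeps every 3rd frame
    return list(range(0, num_frames, stride))


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(center_square_crop_box(1920, 1080))  # crop box for a 1920x1080 frame
print(len(subsample_frames(150, 15, 5)))   # frames kept from 10 s of 15 fps video
f1_15fps, f1_5fps = f1_score(90, 10, 20), f1_score(85, 12, 25)  # hypothetical counts
print(f1_5fps / f1_15fps >= 0.9)           # does 5 fps keep at least 90% of the F1?
```

Centering the crop discards equal margins on both sides of a wide frame; whether that is the right choice depends on where the region of interest lies in the camera view.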

When collecting real-time images and videos of public spaces, street-level cameras inadvertently capture sensitive information such as pedestrian faces and vehicle license plates. To avoid compromising pedestrian and driver privacy at COSMOS sites, we created algorithms and built a pipeline that systematically blurs pedestrians’ faces and vehicles’ license plates. We customized YOLOv4 object detection models and trained them on a ground-floor intersection video dataset. The pipeline automatically and robustly blurs about 99% of visible faces and license plates. Figure 5 illustrates the results of the privacy-preserving blurring of faces and license plates.
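Once a detector has produced bounding boxes, the blurring step itself is simple. The sketch below assumes, hypothetically, that the detector returns face and license-plate boxes as `(x1, y1, x2, y2)` pixel rectangles and applies a box blur inside each one on a grayscale frame stored as a list of rows. `blur_regions` and its `radius` parameter are illustrative names; production code would more likely operate on full-color frames with an image library such as OpenCV.

```python
def blur_regions(image, boxes, radius=2):
    """Return a copy of a grayscale image (list of rows) with each box box-blurred.

    boxes: (x1, y1, x2, y2) pixel rectangles with x2/y2 exclusive, e.g. from a
    face/license-plate detector (the detector itself is not shown).
    Each output pixel is the mean of a (2*radius+1)^2 window clamped to its box,
    so the blur never pulls in, or alters, content outside the boxes.
    """
    out = [row[:] for row in image]  # leave the input frame untouched
    height, width = len(image), len(image[0])
    for x1, y1, x2, y2 in boxes:
        # clamp the box to the image bounds
        x1, y1 = max(0, x1), max(0, y1)
        x2, y2 = min(width, x2), min(height, y2)
        for y in range(y1, y2):
            for x in range(x1, x2):
                ys = range(max(y1, y - radius), min(y2, y + radius + 1))
                xs = range(max(x1, x - radius), min(x2, x + radius + 1))
                window = [image[yy][xx] for yy in ys for xx in xs]
                out[y][x] = sum(window) / len(window)
    return out


frame = [[255 * ((x + y) % 2) for x in range(8)] for y in range(6)]  # toy 8x6 checkerboard
detections = [(1, 1, 5, 4)]  # hypothetical face box from a detector
anonymized = blur_regions(frame, detections, radius=2)
```

A larger radius gives stronger anonymization; because the window is clamped to the box, a radius at least as large as the box makes every pixel in it equal to the box mean.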

[1] S. Yang, E. Bailey, Z. Yang, J. Ostrometzky, G. Zussman, I. Seskar, and Z. Kostic, “COSMOS Smart Intersection: Edge Compute and Communications for Bird’s Eye Object Tracking,” in Proc. 4th International Workshop on Smart Edge Computing and Networking (SmartEdge 2020), IEEE PerCom, Mar. 2020.

[2] M. Ghasemi, “Auto-SDA: Automated video-based social distancing analyzer,” ACM SIGMETRICS Performance Evaluation Review, vol. 49, no. 2, pp. 69–71, Sep. 2021. (ACM SIGMETRICS’21 SRC finalist)

[3] M. Ghasemi, Z. Kostic, J. Ghaderi, and G. Zussman, “Auto-SDA: Automated Video-based Social Distancing Analyzer,” in Proc. 3rd Workshop on Hot Topics in Video Analytics and Intelligent Edges (HotEdgeVideo’21), 2021.

[4] M. Ghasemi, Z. Yang, M. Sun, H. Ye, Z. Xiong, J. Ghaderi, Z. Kostic, and G. Zussman, “Demo: Video-based social distancing evaluation in the COSMOS testbed pilot site,” in Proc. ACM MOBICOM’21, 2021.

[5] Z. Duan, Z. Yang, R. Samoilenko, D. S. Oza, A. Jagadeesan, M. Sun, H. Ye, Z. Xiong, G. Zussman, and Z. Kostic, “Smart City Traffic Intersection: Impact of Video Quality and Scene Complexity on Precision and Inference,” in Proc. 19th IEEE International Conference on Smart City, Dec. 2021.

[6] Z. Yang, M. Sun, H. Ye, Z. Xiong, G. Zussman, and Z. Kostic, “Birds eye view social distancing analysis system,” arXiv:2112.07159 [cs.CV], Dec. 2021.

[7] Z. Yang, M. Sun, H. Ye, Z. Xiong, G. Zussman, and Z. Kostic, “Bird’s-eye View Social Distancing Analysis System,” in Proc. IEEE ICC 2022 Workshop on Edge Learning for 5G Mobile Networks and Beyond, May 2022.